Easy Answers - Providing Feedback

Intended audience: USERS

AO Easy Answers: 4.3

Overview

This overview covers the key concepts, functionality, and considerations involved in asking AI Enhanced (LLM) questions in Easy Answers.

AI Enhanced mode interprets user questions in a more advanced way, including suggesting values or topic content that are missing from the question or cannot be derived from it. Typos are also handled more seamlessly.

To help the user understand the process, various messages will be shown on the screen as seen in the following video clip:

Providing Feedback

Once a result page has been generated, the first line of the page shows how many Apps were generated, the final question (with options to update known words), and two icons that let the user indicate their satisfaction with the results.

Thumbs Up - a simple acknowledgment will be shown at the top of the screen.

This signals to the system that the user is satisfied with the response. No further feedback will be requested. In addition, the interpretation of the validated question (not the resulting data) is saved to the Cache to improve performance when the same question is asked in the future.

This overrides any Ontology-level setting that forces questions not to be cached.

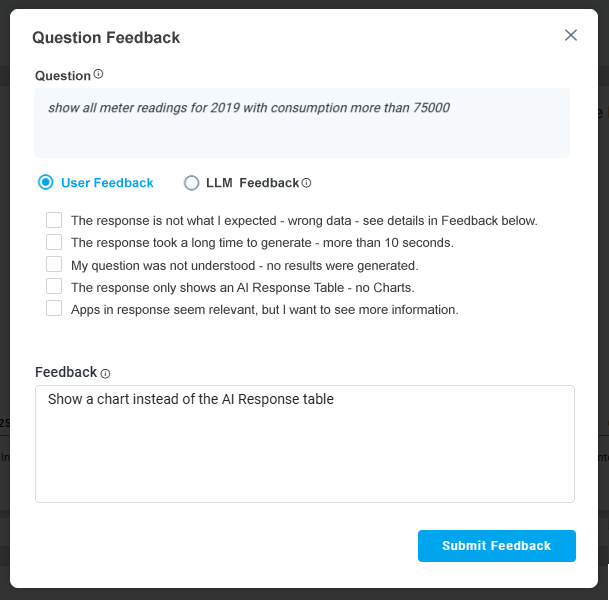

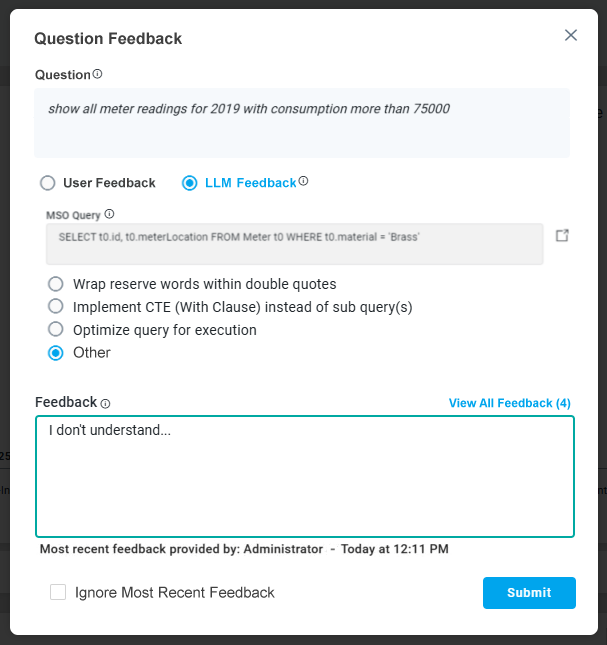
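The caching behavior described for Thumbs Up can be pictured with a minimal sketch. This is not the product's implementation: the class `InterpretationCache`, the flag `ontology_allows_caching`, and the case-insensitive matching of questions are all assumptions made for illustration only.

```python
from typing import Dict, Optional


class InterpretationCache:
    """Hypothetical cache of question interpretations (never the result data)."""

    def __init__(self, ontology_allows_caching: bool = True) -> None:
        # Assumed stand-in for an Ontology-level caching setting.
        self.ontology_allows_caching = ontology_allows_caching
        self._entries: Dict[str, str] = {}

    @staticmethod
    def _key(question: str) -> str:
        # Assumption: questions match case-insensitively, ignoring extra spaces.
        return " ".join(question.lower().split())

    def save(self, question: str, interpretation: str, thumbs_up: bool = False) -> bool:
        # A Thumbs Up overrides a "do not cache" Ontology setting.
        if thumbs_up or self.ontology_allows_caching:
            self._entries[self._key(question)] = interpretation
            return True
        return False

    def lookup(self, question: str) -> Optional[str]:
        # Returns the saved interpretation, or None if nothing was cached.
        return self._entries.get(self._key(question))
```

In this sketch, a cache created with `ontology_allows_caching=False` rejects a plain `save(...)` but accepts the same call with `thumbs_up=True`, mirroring the override described above.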

Thumbs Down - opens a dialog where the user can explain why the thumbs-down icon was clicked. Provide as much information as possible. When the Submit button is clicked, the Feedback is sent for approval (or rejection) by a “supervisor” user who has additional permissions to update the Ontology to benefit future users asking the same or similar questions.

| User Feedback | LLM Feedback |
|---|---|
| This radio-button option is available for simpler, non-technical feedback on the user experience about how the question was interpreted by the system and the responses provided to the question. The User Feedback does not immediately change the question interpretation by the Large Language Model but will be submitted for review. | This radio-button option is available for technical users with insight into database queries and allows for feedback to aid the system to be updated with prompt instructions, linguistics words, or other changes to allow improved interpretation of future user questions. The LLM Feedback will immediately impact how a question will be interpreted by the Large Language Model as any Feedback provided will be added to prompt instructions when the question is retried. |
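The difference between the two options can be sketched as follows. This is an illustrative model only: the `FeedbackStore` class, its method names, and the prompt layout are hypothetical and do not describe the product's actual API. It assumes that every submission is queued for supervisor review, and that only LLM Feedback is added to the prompt instructions used when the question is retried.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class FeedbackStore:
    """Hypothetical store that routes User Feedback and LLM Feedback."""

    # (question, kind, text) entries awaiting supervisor approval or rejection.
    review_queue: List[Tuple[str, str, str]] = field(default_factory=list)
    # Extra prompt instructions per question, fed only by LLM Feedback.
    prompt_instructions: Dict[str, List[str]] = field(default_factory=dict)

    def submit(self, question: str, kind: str, text: str) -> None:
        if kind not in ("user", "llm"):
            raise ValueError("kind must be 'user' or 'llm'")
        # Both kinds of feedback are sent for review.
        self.review_queue.append((question, kind, text))
        if kind == "llm":
            # Only LLM Feedback takes effect immediately on retry.
            self.prompt_instructions.setdefault(question, []).append(text)

    def build_prompt(self, question: str) -> str:
        # Assumed prompt layout: the question followed by any instructions.
        lines = [f"Question: {question}"]
        lines += [f"Instruction: {t}" for t in self.prompt_instructions.get(question, [])]
        return "\n".join(lines)
```

Submitting feedback with `kind="user"` leaves `build_prompt(...)` unchanged, while `kind="llm"` adds an instruction line the next time the prompt is built for the same question.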

Technical Insight on LLM Feedback Options

| LLM Feedback Options | When to Use | Why |
|---|---|---|
| Wrap Reserved Words Within Double Quotes | | |
| Implement CTE (WITH Clause) Instead of Subquery | | |
| Optimize Query for Execution | | |
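For readers less familiar with the SQL terms used in the first two options, here is a small, self-contained sketch using Python's built-in `sqlite3` module. The table and column names are made up for illustration, and the queries are not ones generated by Easy Answers. It shows reserved words (`order`, `group`) wrapped in double quotes so they can be used as identifiers, and the same query written once with a subquery and once with a CTE (WITH clause). The third option, Optimize Query for Execution, is not illustrated here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# "order" and "group" are SQL reserved words; double quotes make them
# usable as table and column names.
conn.execute('CREATE TABLE "order" (id INTEGER, "group" TEXT, amount REAL)')
conn.executemany(
    'INSERT INTO "order" VALUES (?, ?, ?)',
    [(1, "A", 10.0), (2, "A", 30.0), (3, "B", 5.0)],
)

# Subquery form: the aggregation is nested inside the FROM clause.
subquery_sql = """
SELECT "group", total
FROM (SELECT "group", SUM(amount) AS total FROM "order" GROUP BY "group") AS t
WHERE total > 20
"""

# CTE form: the same aggregation is named up front with a WITH clause.
cte_sql = """
WITH totals AS (
    SELECT "group", SUM(amount) AS total FROM "order" GROUP BY "group"
)
SELECT "group", total FROM totals WHERE total > 20
"""

subquery_rows = conn.execute(subquery_sql).fetchall()
cte_rows = conn.execute(cte_sql).fetchall()
print(subquery_rows == cte_rows)  # both forms return the same rows
```

Both forms return the same result; the CTE version simply gives the intermediate result a name, which tends to be easier to read when a query has several steps.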

View All Feedback Dialog

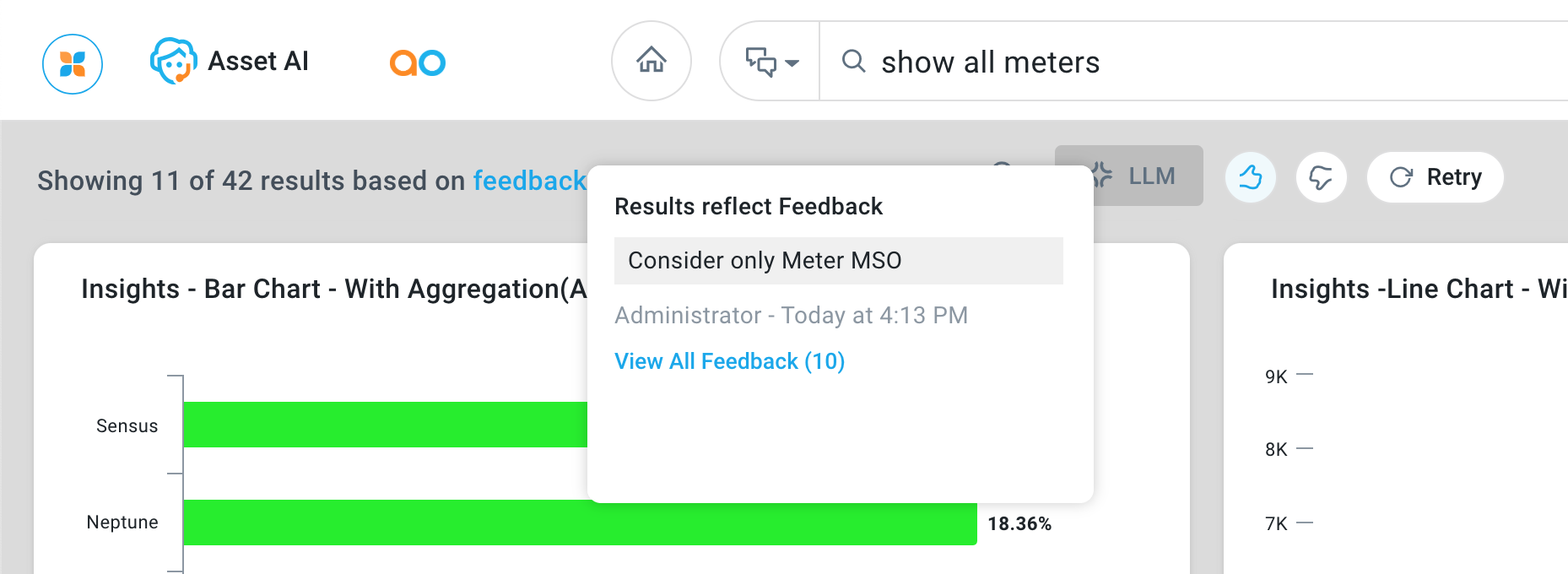

When LLM Feedback has been provided, the data presented in the Apps on the Results page may have changed. The user can view the Feedback that affected the question by clicking the “Feedback” link highlighted at the top of the Results page.

To view all Feedback, use the additional link shown in the popup dialog for the most recent question Feedback. The View All Feedback dialog can also be opened from the Options menu in the Question field. See Viewing Feedback History.

To view the full Question History and associated Feedback for all unique questions, see Viewing All History.

For technical/power users, the Review Feedback workflow screen is used to manage Feedback for either the current user's questions or all questions, including updating Prompt Instructions, Words (Synonyms), and any other Ontology updates needed to improve how LLM-based questions are interpreted and how results are provided. See Reviewing Feedback for LLM-Based Questions.